Although I'm German, switching between English and German in posts is not very helpful for non-Germans, I think. So I'll stick to English 😃

This case is clear to me:

Every client (💻️) sends two requests to /grommunio.php?subsystem=webapp_0815XXX0815 for every folder action in the web client.

These requests are passed through my nginx reverse proxy (⚙️) to grommunio's nginx (📩). I can see that from the logs, BUT:

Since log entries are written after success/failure and not at the time of the request, I added a little "echo to file" routine at the beginning of grommunio.php to see whether the file is called and how often.

So far, so good.

My nginx reverse proxy (⚙️) closes the upstream request to grommunio's nginx (📩) after the configured timeout, and grommunio's nginx (📩) logs 499 (client closed request), which is correct. Grommunio's nginx (📩) also reports the error "upstream timed out (110: Connection timed out) while reading response header from upstream" in /var/log/nginx/nginx-web-error.log.

And why? Because the PHP requests take way too long.

Right after logging in, ALL requests to grommunio.php get through fast and reliably. Always.

As soon as I start clicking through three or four folders, the requests pile up in a queue and nginx waits until the timeout because the PHP processes finish veeeeery late. And again: the mailbox is mostly empty.

Some eye candy:

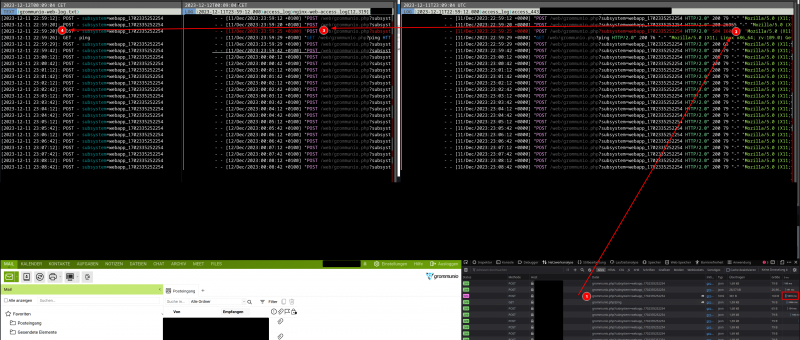

Opening a folder in the web client results in two requests to grommunio.php, and the second one did not finish within the 5s timeout (which I set for testing purposes):

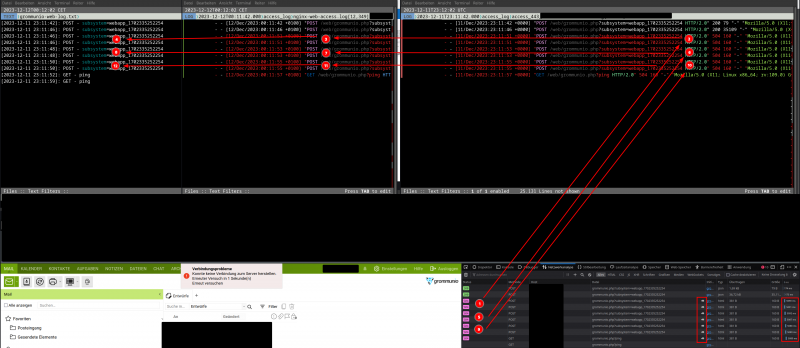

Same procedure with clicking through some more folders:

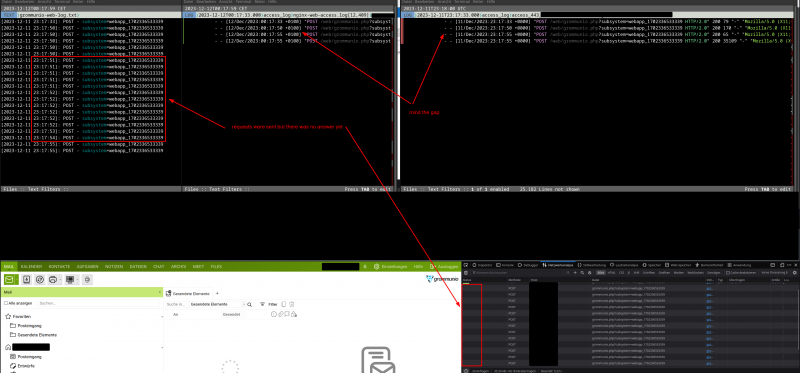

I increased the timeout and, as you can see, the requests are "stuck" even though they were sent to grommunio.php just fine:

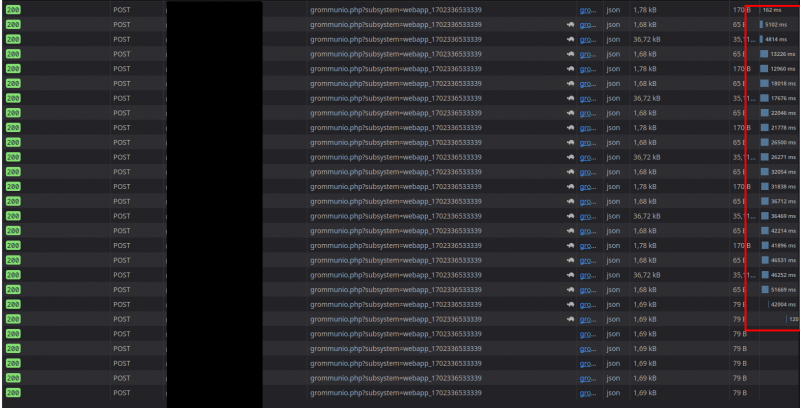

And they finished after around 13s to 50s (!!!). If that's the case while NOBODY else is using the system and it's just idling, I can imagine that the whole system breaks as soon as more than one person is using the web client 😂😅

I know this kind of "problem" from Nextcloud, where the only solution was to increase the number of PHP pool workers. I tried that here, but it didn't change anything, so it must be a database request or something else that takes so long on each request.

The only solution that comes to my mind is to abort the pending PHP requests as soon as someone clicks on another folder. Without this, I can queue up lots of requests on the server, which slows down the whole machine for everyone. And once I have clicked on another folder, the old request is useless by the time it finishes (after 30-45s 😂), because I have already clicked on five other folders in the meantime.
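To illustrate the idea on the client side, here is a minimal sketch of a "latest request wins" pattern using the standard `AbortController` API. It assumes the folder requests are sent via `fetch`, which is an assumption and not something taken from grommunio's code; `LatestOnly`, `folderRequests` and `loadFolder` are hypothetical names. Aborting in the browser only closes the connection. Whether the PHP process on the server actually stops early is a separate question that this sketch does not answer.

```typescript
// Hypothetical helper (not part of grommunio): keeps only the newest request alive.
class LatestOnly {
  private current: AbortController | null = null;

  // Abort whatever is still in flight and return a signal for the new request.
  next(): AbortSignal {
    if (this.current !== null) {
      this.current.abort();
    }
    this.current = new AbortController();
    return this.current.signal;
  }
}

const folderRequests = new LatestOnly();

// Hypothetical loader: `url` stands for whatever the web client requests per folder.
async function loadFolder(url: string): Promise<unknown> {
  const signal = folderRequests.next();
  try {
    const response = await fetch(url, { signal });
    return await response.json();
  } catch (err) {
    // A superseded request rejects with an AbortError; that is expected here.
    if (err instanceof Error && err.name === "AbortError") {
      return null;
    }
    throw err;
  }
}
```

With this, every folder click would call `loadFolder(...)`, and only the response for the most recently clicked folder would still be awaited by the client.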

I increased the nginx timeout to 120s for now. Let's see what the next day in production brings.

Will ask for support from Grommunio GmbH here as this should be solved somehow.

EDIT: As the pictures are just too small here (how bad), I uploaded them again here: https://next.sweetba.se/s/ZCn353zSJoaCBsc